The History of Computers and Their Development over Time

Most human activities in the modern era involve computers. This was especially true during the pandemic, when many activities moved entirely online.

Computers were originally intended only to support work, but they have now become a necessity for most people.

Before computers gained the sophisticated features they have today, their development began with a simple innovation in the 1800s.

The emergence of the first computer

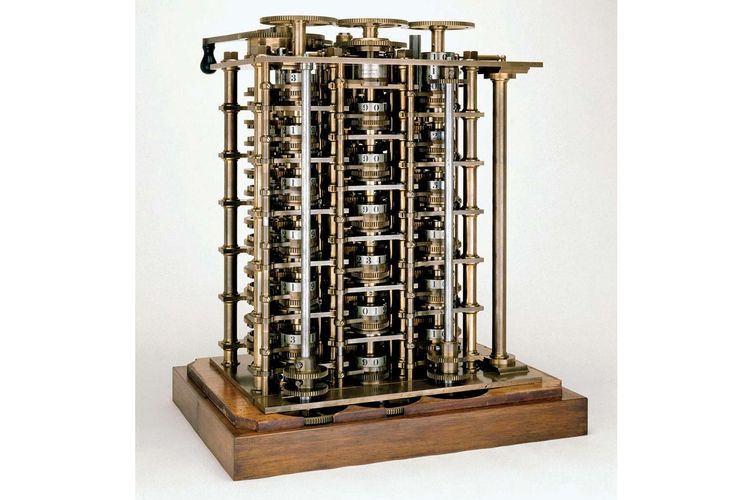

The first computer is usually traced to 1822 and the English mathematician Charles Babbage, who set out to build a steam-powered calculating machine that could compute tables of numbers.

He later named the machine “Difference Engine 0”, and it is often regarded as the world’s first computer. In form, Difference Engine 0 looks nothing like a modern computer.

However, its working principle is the same as that of a modern computer: it performs calculations on numbers automatically.

By 1890, an inventor named Herman Hollerith had designed a punched-card system that was used to tabulate the results of that year's US census, a task that had taken most of a decade for the 1880 census.

This innovation reportedly saved the government 5 million US dollars. Hollerith went on to found a tabulating machine company that, after a merger and a later renaming, became IBM.

The forerunner of the digital computer

The theoretical forerunner of the digital computer was described in 1936 by Alan Turing.

Turing was a mathematician who proposed an abstract machine capable of executing any set of instructions.

The concept was later named the Turing machine in his honor. His name is also attached to the Turing test, a later proposal of his for judging whether a machine can exhibit intelligent behavior.

The first digital computer was developed by Konrad Zuse, a German civil engineer. Before the Second World War broke out, Zuse built the Z1, often described as the first programmable digital computer.

Beginning in 1936, in his parents’ living room in Berlin, he assembled metal plates and pins into a machine that could perform addition and subtraction.

Although these early machines were destroyed during World War II, Zuse is widely credited with creating the first digital computer.

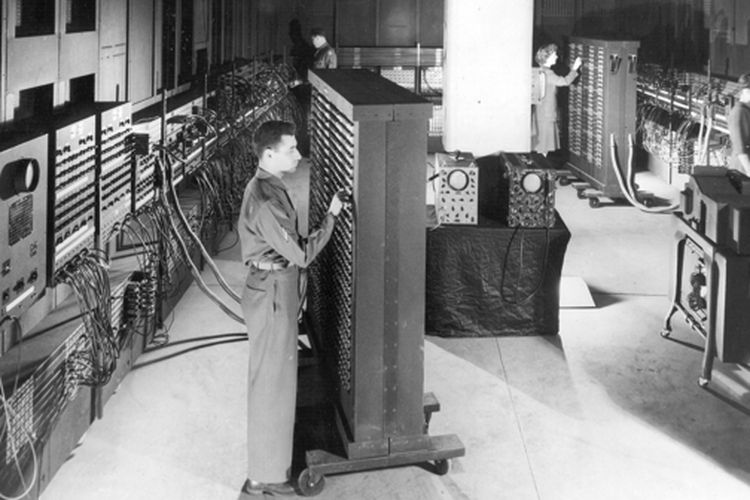

During the Second World War, in 1943, John Mauchly and J. Presper Eckert began building a machine called the Electronic Numerical Integrator and Computer (ENIAC), which was completed in 1945.

ENIAC was originally created to help the US Army calculate artillery firing tables. It could perform thousands of calculations per second.

ENIAC weighed about 30 tons and occupied roughly 167 square meters (about 1,800 square feet) of floor space.

Its size came from its many components, including 40 cabinets, 6,000 switches, and 18,000 vacuum tubes.

The birth of programming languages

In the 1950s, computer scientist Grace Hopper developed some of the first compilers and the English-like language FLOW-MATIC. Her work strongly influenced COBOL, introduced in 1959, which let programmers write instructions using English words.

Previously, users could only give instructions to a computer as rows of numbers. Since then, programming languages have developed along with the evolution of computers.

Another early language, FORTRAN, was developed by an IBM programming team led by John Backus. Work began in 1954, and the first compiler was released in 1957.

As a technology-focused company, IBM aimed to lead the global computer market. In the mid-1950s it introduced the IBM 650, one of the first computers to be mass-produced.

In 1965, Olivetti introduced the Programma 101, a desktop machine intended as a working tool for mathematicians, engineers, and the general public.

Compared with ENIAC, the Programma 101 was far more compact. It was about the size of a typewriter, weighed 29 kg, and had a built-in printer.

Personal computer

The 1970s can be regarded as the era in which the personal computer was born. It was marked by the emergence of the Xerox Alto in 1973, a personal computer that could send e-mail and print documents.

What set the Xerox Alto apart was a design that already resembled a modern computer: it came with a mouse, a keyboard, and a screen.

The same decade saw several other major inventions, among them the diskette (floppy disk), Ethernet, and the Dynamic Random-Access Memory (DRAM) chip.

Meanwhile, Apple was founded in 1976 by Steve Jobs and Steve Wozniak. The two introduced the Apple I, an early computer built on a single circuit board.

IBM then popularized the personal computer with its first PC, code-named Acorn and released in 1981. It came with an Intel chip, two diskette drives, a keyboard, and an optional color monitor.

Several other inventions followed in 1983. The CD-ROM arrived as a storage medium that could hold up to 550 MB of data.

It was later adopted as a general standard for computers.

In the same year, Microsoft introduced Word, and Apple followed with the Macintosh in 1984. The Macintosh is known as the first commercially successful computer to be controlled with a mouse and a graphical user interface.

Not wanting to be left behind, Microsoft launched Windows in 1985, offering multitasking and a graphical interface.

In 1991, Apple introduced the PowerBook, a series of laptops that could be taken anywhere.

Computers in the 2000s

With the arrival of the 21st century, the pace of computer development accelerated along with technology in general.

Diskettes and CD-ROMs began to be displaced by a more capable portable storage medium, the USB drive.

In 2001, Apple released a new operating system called Mac OS X, and its competitor Microsoft launched a more modern operating system of its own, Windows XP.

Apple went on to lead the market with iTunes. When the iTunes Music Store opened in 2003, customers bought more than 1 million songs in its first week.

Services and applications such as YouTube, Mozilla Firefox, and MySpace also appeared during this period.

By 2006, laptop use was spreading rapidly. The trend was helped along by the MacBook Pro, introduced that year by Steve Jobs.

The MacBook line continued with the MacBook Air in 2008. A year earlier, in 2007, Steve Jobs had introduced the first iPhone, and the iPad followed in 2010.

Internet of Things

The year 2011 is often cited as a turning point for consumer Internet of Things (IoT) products. The Nest Learning Thermostat, released that year, became one of the first widely known IoT devices for the home.

Since then, many other IoT products have appeared on the market, such as the Apple Watch in 2015.

In 2019, Apple announced iPadOS, its first operating system made specifically for the iPad.